In today’s fast-paced software industry, delivering applications rapidly and reliably across different computing environments is more critical than ever. Docker, a leading containerization platform, has revolutionized the way developers build, ship, and run software. This article explores the fundamentals of Docker and containerization, how they work, their benefits, and why mastering them is essential for modern software development and DevOps practices.

What Is Containerization?

Containerization is a form of lightweight, operating-system-level virtualization in which applications and their dependencies are packaged into isolated units called containers. Containers share the host's operating system kernel but are isolated from one another through kernel features such as Linux namespaces and cgroups. This approach allows multiple containers to run on the same host without interfering with one another's files, processes, or dependencies.

Unlike virtual machines, containers do not require a full operating system per instance. This drastically reduces overhead and improves resource efficiency.
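One quick way to see the shared kernel in practice is to compare the kernel release reported by the host with the one reported inside a container. The following is a minimal sketch, assuming a Linux host with a running Docker daemon and the public `alpine` image; the function name `compare_kernels` is only illustrative.

```shell
# Print the kernel release seen by the host and by a container.
# On a Linux host the two values should match, because the container
# reuses the host kernel instead of booting its own.
compare_kernels() {
    echo "host:      $(uname -r)"
    echo "container: $(docker run --rm alpine uname -r)"
}
```

Calling `compare_kernels` on Docker Desktop for macOS or Windows will show a different picture, since containers there run inside a small Linux virtual machine rather than directly on the host kernel.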

Why Containerization Matters

- Faster Development Cycles: Developers can build, test, and deploy applications more quickly due to consistency across environments.

- Environment Consistency: Containers largely eliminate the “it works on my machine” problem by running the same image, with the same dependencies, in dev, staging, and production.

- Better Resource Utilization: Containers are lightweight and use fewer system resources than VMs.

- Improved Scalability: Containers can be easily orchestrated and scaled horizontally across distributed systems.

What Is Docker?

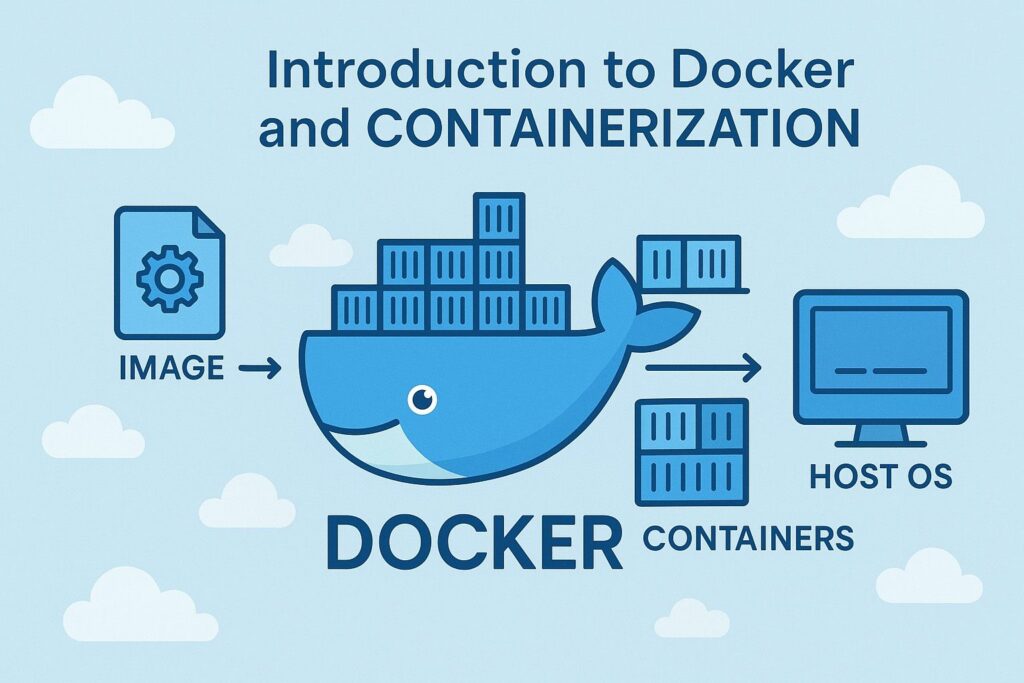

Docker is an open-source platform that simplifies building, deploying, and managing containers. It provides a set of tools and services for creating containerized applications. Docker uses a client-server architecture, in which the CLI sends requests to a background daemon, and it supports orchestration either natively (Swarm mode) or through third-party tools such as Kubernetes.

Docker has become the go-to standard for containerization due to its robust ecosystem, developer-friendly CLI, and seamless integration with cloud platforms and CI/CD pipelines.

Core Components of Docker

1. Docker Engine

The Docker Engine is the runtime that runs and manages containers on the host system. It includes:

- Docker Daemon (`dockerd`): Handles container operations like building, running, and monitoring.

- Docker CLI (`docker`): Command-line tool for interacting with the Docker Daemon.

- Docker API: RESTful interface for programmatic control over Docker.
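The split between CLI, daemon, and API can be made concrete by asking the daemon for its version in two ways. This is a sketch under stated assumptions: a Linux install where the daemon listens on the default socket `/var/run/docker.sock`, and `curl` available on the host. The function names are hypothetical.

```shell
# Ask the daemon for its version through the CLI.
engine_version_cli() {
    docker version --format '{{.Server.Version}}'
}

# Ask the same daemon through the Engine REST API, bypassing the CLI.
# /var/run/docker.sock is the default socket on Linux installs;
# adjust the path if your daemon listens elsewhere.
engine_version_api() {
    curl --silent --unix-socket /var/run/docker.sock http://localhost/version
}
```

The first function prints a bare version string, while the second returns a JSON document from the API's `/version` endpoint. Both answers come from the same daemon, which is the point of the client-server design.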

2. Docker Images

A Docker image is a read-only template containing the application code, dependencies, environment variables, and configuration files. Images serve as blueprints for containers and are built using a Dockerfile.

Images are stored locally on the host after being built or pulled, and can be shared publicly or privately through Docker Hub or self-hosted registries.
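To get a feel for what an image contains, it can help to pull one and list its layers. A small sketch, assuming Docker is installed and the registry is reachable; `inspect_image` is an illustrative helper, not a Docker command.

```shell
# Download an image, then look at what it is made of.
inspect_image() {
    image="$1"                  # e.g. node:18
    docker pull "$image"        # fetch from the registry (Docker Hub by default)
    docker image ls "$image"    # confirm it is now stored locally
    docker history "$image"     # one row per build step, newest first
}
```

For example, `inspect_image node:18` should end with a table whose rows correspond to the instructions that produced the image.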

3. Docker Containers

A container is an executable instance of an image. It has its own isolated filesystem, networking, and process tree. Containers can be started, stopped, restarted, and deleted independently of the image they are based on.
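The lifecycle described above maps onto a handful of CLI calls. The sketch below assumes an image whose main process keeps running (such as the public `nginx` image); the helper name and its arguments are illustrative.

```shell
# Walk one container through its lifecycle. The image is untouched
# throughout; only the container is created and destroyed.
container_lifecycle() {
    image="$1"
    name="$2"
    docker create --name "$name" "$image"   # create, but do not start
    docker start "$name"
    docker stop "$name"
    docker restart "$name"
    docker rm --force "$name"               # stop if running, then remove
    docker image ls "$image"                # the image is still present
}
```

A call such as `container_lifecycle nginx demo` leaves no container behind, while the `nginx` image stays available for the next run.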

4. Dockerfile

A Dockerfile contains a set of instructions used to build a Docker image. It defines the base image, copies application files, installs dependencies, and specifies the startup command.

Example:

FROM node:18

WORKDIR /app

COPY . .

RUN npm install

CMD ["node", "server.js"]
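A common refinement of the Dockerfile above is to copy the dependency manifests before the rest of the source, so that the `npm install` layer is reused from the build cache when only application code changes. The sketch below writes such a variant to disk. It assumes the project has a `package.json` (and optionally a lockfile) at its root, and `write_dockerfile` is a hypothetical helper.

```shell
# Write a cache-friendlier variant of the Dockerfile into a directory.
write_dockerfile() {
    dir="${1:-.}"
    cat > "$dir/Dockerfile" <<'EOF'
FROM node:18
WORKDIR /app
# Copy only the dependency manifests first, so the install layer is
# reused from cache until package.json or the lockfile changes.
COPY package*.json ./
RUN npm install
# Copy the rest of the source; edits here no longer invalidate the install layer.
COPY . .
CMD ["node", "server.js"]
EOF
}
```

Running `write_dockerfile .` followed by `docker build -t myimage .` twice in a row should show the second build reusing the cached install step, provided the manifests did not change in between.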

5. Docker Hub and Registries

Docker Hub is the default cloud-based registry for Docker images. Developers can pull official images or push their custom images. Organizations can also use private registries for internal use.
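Pushing to a private registry mostly comes down to how the image is named: the registry host becomes the first part of the image reference. A minimal sketch, where `registry.example.com` and `team/myimage:1.0` are placeholders rather than real locations, and `publish_image` is an illustrative helper.

```shell
# Log in to a registry, tag a local image for it, and push.
publish_image() {
    local_image="$1"    # e.g. myimage
    registry="$2"       # e.g. registry.example.com (placeholder)
    repo="$3"           # e.g. team/myimage:1.0 (placeholder)
    docker login "$registry" || return 1     # prompts for credentials
    docker tag "$local_image" "$registry/$repo"
    docker push "$registry/$repo"
}
```

Usage would look like `publish_image myimage registry.example.com team/myimage:1.0`. When the registry part is omitted from a reference, Docker defaults to Docker Hub.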

How Docker Works

- Build Phase: A developer writes a Dockerfile and runs `docker build` to create an image.

- Shipping Phase: The image is shared through Docker Hub or a registry.

- Run Phase: A container is instantiated from the image using `docker run`.

- Isolation and Portability: The containerized app runs consistently on any host with Docker installed, provided the image was built for that host's CPU architecture (for example amd64 or arm64).
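The phases above can be sketched as two small shell functions, one for the machine that builds and one for the machine that runs. This assumes a Dockerfile in the current directory, an app that listens on port 80 inside the container, and a registry both machines can reach; the function names and the image reference passed in are illustrative.

```shell
# On the build machine: build phase, then shipping phase.
build_and_ship() {
    ref="$1"                                # full image reference, registry included
    docker build -t "$ref" . || return 1    # Dockerfile in current directory -> image
    docker push "$ref"                      # upload to the registry named in the reference
}

# On the target machine: fetch the image and start a container from it.
pull_and_run() {
    ref="$1"
    docker pull "$ref" || return 1
    docker run -d -p 8080:80 "$ref"         # host port 8080 -> container port 80
}
```

The same reference is passed to both functions, which is what ties the container on the target machine back to the exact image that was built.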

Key Docker Features

- Layered File System: Docker images are built in layers. This allows efficient image reuse and minimal storage redundancy.

- Copy-on-Write: Containers can share layers, and changes are stored separately, improving performance and efficiency.

- Networking Support: Docker provides bridge, host, and overlay network drivers for inter-container communication.

- Volume Management: Volumes enable persistent data storage outside of containers.

- Logging and Monitoring: Docker integrates with logging tools and monitoring platforms for observability.
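Two of the features above, networking and volumes, are easiest to understand side by side. The sketch below assumes the public `postgres:16` image and a locally built `myimage`; the names `appnet`, `appdata`, `db`, and `web` are placeholders, and the password is for demonstration only.

```shell
# Give two containers a shared bridge network and one of them a named volume.
network_and_volume_demo() {
    docker network create --driver bridge appnet
    docker volume create appdata
    # Data written to /var/lib/postgresql/data lands in the volume and
    # survives removal of the db container.
    docker run -d --name db --network appnet \
        -e POSTGRES_PASSWORD=example \
        --mount type=volume,source=appdata,target=/var/lib/postgresql/data \
        postgres:16
    # On a user-defined bridge network, containers resolve each other by
    # name, so this container can reach the database at host "db".
    docker run -d --name web --network appnet myimage
}
```

After running it, removing and recreating the `db` container with the same `--mount` option should bring the existing data back, since it lives in `appdata` rather than in the container's writable layer.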

Common Docker Commands

- `docker build -t myimage .`: Build an image from the Dockerfile in the current directory and tag it `myimage`

- `docker run -d -p 8080:80 myimage`: Run a container in detached mode, mapping host port 8080 to container port 80

- `docker ps`: List running containers

- `docker stop <container_id>`: Stop a running container

- `docker rm <container_id>`: Remove a stopped container

- `docker images`: List images stored locally

- `docker pull <image>`: Download an image from a registry

- `docker push <image>`: Upload an image to Docker Hub or another registry

Docker vs Virtual Machines (VMs)

| Feature | Docker (Containers) | Virtual Machines (VMs) |
|---|---|---|
| OS Requirement | Shares host OS kernel | Requires full guest OS |
| Startup Time | Seconds | Minutes |
| Resource Usage | Lightweight | Heavy |
| Isolation | Process-level isolation | Full hardware virtualization |
| Performance | Near-native | Overhead from hypervisor |
| Portability | High | Moderate |
| Use Cases | Microservices, DevOps, CI/CD | Monolithic apps, legacy systems |

Real-World Use Cases

Microservices Deployment

Each microservice can run in its own container with its dependencies, making it easier to manage and scale independently.
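Docker Compose is one way to describe several such services together and scale one of them on its own. The following sketch writes a two-service Compose file and starts it. The service names `api` and `worker`, and the `./api` and `./worker` build directories, are hypothetical and would need to exist in a real project.

```shell
# Write a two-service Compose file into a directory.
write_compose_file() {
    dir="${1:-.}"
    cat > "$dir/compose.yaml" <<'EOF'
services:
  api:
    build: ./api        # image built from ./api/Dockerfile
    ports:
      - "8080:80"       # host port 8080 -> container port 80
  worker:
    build: ./worker     # no published port, so replicas do not collide
EOF
}

# Start both services in the background, running three worker replicas.
start_stack() {
    docker compose up -d --scale worker=3
}
```

Only `worker` is scaled here because `api` publishes a fixed host port, and a second replica would fail to bind it. Scaling a service that publishes ports typically needs a load balancer or dynamically assigned host ports in front of it.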

Continuous Integration/Continuous Deployment (CI/CD)

Docker integrates with CI/CD tools like Jenkins, GitHub Actions, and GitLab CI to automate build-test-deploy workflows.
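Whatever CI tool is used, the job body tends to follow the same shape: build the image, run the tests inside it, and push only if they pass. This is a sketch under assumptions, not a recipe for any specific CI system: it presumes a Git checkout, a Dockerfile in the working directory, a project whose tests run with `npm test`, and a placeholder image name.

```shell
# A CI job body: build, test inside the image, then publish.
ci_pipeline() {
    image="$1"                                 # e.g. registry.example.com/team/myimage (placeholder)
    tag="$(git rev-parse --short HEAD)"        # tag images by commit for traceability
    docker build -t "$image:$tag" . || return 1
    # Run the test suite in a throwaway container; stop here if it fails.
    docker run --rm "$image:$tag" npm test || return 1
    docker push "$image:$tag"
}
```

Tagging by commit hash, rather than reusing `latest`, makes it possible to tell which source revision a deployed container came from.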

Multi-Cloud and Hybrid Cloud

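Containerized apps can be deployed across multiple cloud providers without changing the application code, although provider-specific settings such as networking, storage, and credentials usually still need per-environment configuration.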

Local Development Environments

Developers can create reproducible environments on any machine without worrying about host dependencies or OS differences.
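For local development this often means running project commands inside a throwaway container instead of installing the toolchain on the host. A minimal sketch, assuming a Node.js project in the current directory and the public `node:18` image; `run_in_container` is an illustrative wrapper.

```shell
# Run a command inside a disposable Node container. The current
# directory is bind-mounted at /app, so the container sees the
# project files and any files it writes appear on the host.
run_in_container() {
    docker run --rm \
        -v "$PWD":/app \
        -w /app \
        node:18 "$@"
}
```

With this in place, `run_in_container npm install` followed by `run_in_container npm test` uses the Node version from the image regardless of what, if anything, is installed on the host.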

Legacy Software Modernization

Legacy applications can be encapsulated in containers, allowing them to run on modern infrastructure without major refactoring.

Benefits of Docker in DevOps and Modern Development

- Rapid Iteration: Changes can be tested and deployed quickly, since starting a container does not involve booting an operating system.

- Environment Parity: Developers and operations teams use the same environments, reducing deployment issues.

- Automation: Infrastructure and deployments can be automated with Docker Compose and orchestration tools.

- Security: Docker includes features like user namespaces, seccomp profiles, and image scanning for vulnerability detection.
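On the security point, the features named above are mostly configured at the daemon or tooling level. At the level of a single container, a few `docker run` flags are often combined to reduce what a compromised process could do. The sketch below is one possible combination, not a complete hardening guide; the user ID and the limits are arbitrary example values, and `run_hardened` is a hypothetical wrapper.

```shell
# Start a container with a reduced attack surface.
run_hardened() {
    docker run -d \
        --read-only \
        --cap-drop ALL \
        --security-opt no-new-privileges \
        --user 1000:1000 \
        --memory 256m \
        --pids-limit 100 \
        "$@"
}
```

A call such as `run_hardened myimage` starts the container with a read-only root filesystem, no Linux capabilities, a non-root user, and caps on memory and process count. Applications that need scratch space may additionally require an option like `--tmpfs /tmp`, passed before the image name.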

Conclusion

Docker and containerization have become indispensable tools for modern software development, enabling developers and DevOps engineers to build applications that are portable, efficient, and scalable. Containers simplify the deployment pipeline, enhance reproducibility, and reduce operational overhead by encapsulating everything needed to run an application in a self-contained unit.

As applications become more distributed and cloud-native, Docker provides the foundation needed to deliver robust, production-ready software across diverse environments. Learning Docker is a vital skill for any developer or engineer aiming to thrive in today’s dynamic software ecosystem.

I’m Shreyash Mhashilkar, an IT professional who loves building user-friendly, scalable digital solutions. Outside of coding, I enjoy researching new places, learning about different cultures, and exploring how technology shapes the way we live and travel. I share my experiences and discoveries to help others explore new places, cultures, and ideas with curiosity and enthusiasm.