As modern applications grow in scale and complexity, the traditional methods of managing software infrastructure have proven inadequate. Developers and operations teams require a robust, flexible, and automated platform to manage containerized workloads. Kubernetes is the solution that has redefined how software is deployed, scaled, and managed in the cloud-native era.

In this article, we break down how Kubernetes works in simple terms, explore its key components, explain its internal mechanisms, and highlight its real-world applications and benefits.

What Is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally developed by Google and now governed by the Cloud Native Computing Foundation (CNCF), Kubernetes has become the de facto standard for managing containers in production environments.

At its core, Kubernetes lets you declare your application’s desired state. It then continuously compares that desired state with the actual state of the cluster and reconciles any differences.
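The desired-state idea can be illustrated with a small sketch. This is not Kubernetes code: the `reconcile` function and the pod names are hypothetical, and a real controller watches the API server rather than receiving plain lists.

```python
def reconcile(desired_replicas, running):
    """Return the actions needed to move the actual state toward the desired state."""
    actions = []
    diff = desired_replicas - len(running)
    if diff > 0:
        # Too few pods: start the missing ones (names are made up for the example).
        actions += [f"start pod-{len(running) + i}" for i in range(diff)]
    elif diff < 0:
        # Too many pods: stop the surplus, taken from the end of the list.
        actions += [f"stop {name}" for name in running[diff:]]
    return actions


print(reconcile(3, ["pod-0"]))                    # scale up
print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))  # scale down
print(reconcile(2, ["pod-0", "pod-1"]))           # already converged
```

Running the same function repeatedly is safe: once the actual state matches the desired state, it returns no actions.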

Why Do Developers Use Kubernetes?

Kubernetes solves several problems in distributed application environments:

- Consistency across environments: Ensures the same code runs seamlessly across development, staging, and production.

- Automatic recovery: Restarts failed containers, reschedules workloads on healthy nodes, and maintains service availability.

- Scalable deployments: Dynamically adjusts the number of running containers based on CPU usage, memory, or custom metrics.

- Declarative configuration: Uses configuration files to define infrastructure as code for repeatability and version control.

- Platform independence: Kubernetes runs on public cloud platforms, private data centers, or even on a local laptop.

- Service discovery and load balancing: Automatically routes traffic and balances load among healthy containers.

Core Concepts and Architecture

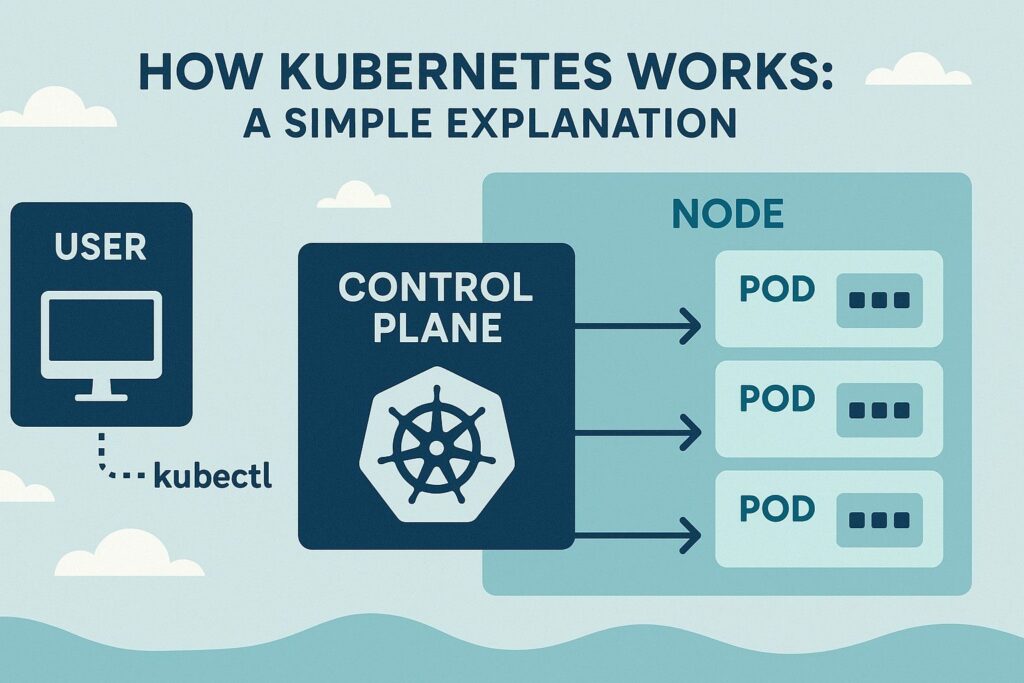

Kubernetes operates in a clustered architecture composed of two primary layers: the control plane and worker nodes.

1. Kubernetes Cluster

A Kubernetes cluster is a group of machines working together to run containerized applications. It includes:

- Control Plane: Manages the cluster state and makes scheduling and lifecycle decisions. It can run on one or more machines, historically called master nodes.

- Worker Nodes: Run containerized applications in the form of pods.

2. Control Plane Components

The control plane governs the cluster and ensures the system runs according to the desired state.

- API Server: Acts as the central management point and entry interface. All `kubectl` commands and API requests are processed here.

- Scheduler: Assigns new pods to nodes based on available resources, constraints, and affinities.

- Controller Manager: Maintains cluster health by managing node states, replica counts, and responding to events.

- etcd: A distributed, consistent key-value store used to store all cluster data, including configuration and secrets.
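To make the scheduler's role more concrete, here is a minimal sketch of a filter-then-score placement decision. The `Node` class, the node names, and the scoring rule (prefer the node with the most free CPU) are assumptions for illustration; the real scheduler weighs many more factors, including affinities and taints.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


def pick_node(nodes, cpu_m, mem_mi):
    """Return the name of a node that fits the pod, or None if no node does."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score: a simplified rule that prefers the node with the most free CPU.
    return max(feasible, key=lambda n: n.free_cpu_m).name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 4000, 256),
]
print(pick_node(nodes, cpu_m=1000, mem_mi=512))
print(pick_node(nodes, cpu_m=8000, mem_mi=512))
```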

3. Worker Node Components

Worker nodes are the machines where containers are executed. Each node contains:

- Kubelet: An agent that runs on each node and ensures containers are running in their assigned pods.

- Kube Proxy: Handles network rules and routes traffic to the appropriate container.

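- Container Runtime: The underlying software (e.g., containerd, CRI-O) that runs containers and manages their lifecycle. Since Kubernetes 1.24 removed dockershim, Docker Engine needs the cri-dockerd adapter to serve as a runtime.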

Kubernetes Objects and Resources

1. Pods

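A pod is the smallest deployable unit in Kubernetes. It encapsulates one or more containers that share storage, networking, and a runtime environment. Pods are ephemeral: a failed pod is not revived in place. When a controller such as a Deployment manages the pod, Kubernetes creates a replacement automatically; a standalone pod that is deleted or loses its node is not recreated.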
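As an illustration of containers sharing storage inside one pod, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, container names, images, and mount paths are placeholders chosen for the example.

```python
import json

# Hypothetical two-container pod; both containers mount the same emptyDir volume.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "echo hello > /data/index.html && sleep 3600"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Because the two containers live in the same pod, they also share one network namespace and can reach each other over `localhost`.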

2. ReplicaSets and Deployments

- ReplicaSet: Ensures a specified number of pod replicas are running at any given time.

- Deployment: Manages ReplicaSets, keeps a revision history of your application, and supports rolling updates and rollbacks.
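A rolling update can be pictured as gradually trading old pods for new ones. The sketch below is a simplified model, not the Deployment controller itself: it assumes new pods become ready instantly, and the default values for `max_surge` and `max_unavailable` are chosen for the example (they mirror the `maxSurge` and `maxUnavailable` fields of a Deployment's rolling update strategy).

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Return (old, new) pod counts after each round of a simplified rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods while keeping at least replicas - max_unavailable running.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


for old, new in rolling_update(3):
    print(f"old={old} new={new}")
```

With three replicas and the example defaults, the total never drops below three pods, so capacity is preserved throughout the rollout.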

3. Services

A Service is an abstraction that provides a stable IP address and DNS name to access pods. Services ensure network connectivity even when pod IPs change.

- ClusterIP: Internal-only access.

- NodePort: Exposes services via static ports on each node.

- LoadBalancer: Provisions an external IP via cloud provider integration.
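The way a Service finds its pods can be sketched as label matching followed by load distribution. Everything here is illustrative: the pod records and IP addresses are made up, and round-robin is a simplification (the actual distribution depends on the kube-proxy mode in use).

```python
from itertools import cycle

# Hypothetical pod inventory; a real Service reads this from the API server.
pods = [
    {"name": "web-1", "ip": "10.0.0.4", "labels": {"app": "web"}, "ready": True},
    {"name": "web-2", "ip": "10.0.0.7", "labels": {"app": "web"}, "ready": False},
    {"name": "web-3", "ip": "10.0.1.2", "labels": {"app": "web"}, "ready": True},
    {"name": "db-1", "ip": "10.0.1.9", "labels": {"app": "db"}, "ready": True},
]


def endpoints(selector, pods):
    """Return IPs of ready pods whose labels contain every selector pair."""
    return [
        p["ip"]
        for p in pods
        if p["ready"] and all(p["labels"].get(k) == v for k, v in selector.items())
    ]


backends = endpoints({"app": "web"}, pods)
rotation = cycle(backends)
picks = [next(rotation) for _ in range(4)]
print(backends)
print(picks)
```

Clients keep using the Service's stable address while the set of backends changes underneath it, which is why pod IP churn does not break connectivity.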

4. ConfigMaps and Secrets

- ConfigMaps: Inject configuration data into containers without rebuilding images.

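- Secrets: Store sensitive data like passwords, tokens, and keys. Values are only base64-encoded by default, so enable encryption at rest and restrict access with RBAC to protect them.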
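It is worth seeing how Secret values are represented. Values in a Secret's `data` field are base64-encoded, which is an encoding rather than encryption, so anyone who can read the object can decode it. The secret name, key, and password below are placeholders.

```python
import base64
import json

plaintext = "s3cr3t-password"  # placeholder value for the example

# Encode the value the way the Secret `data` field expects it.
encoded = base64.b64encode(plaintext.encode("utf-8")).decode("ascii")

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": encoded},
}
print(json.dumps(secret, indent=2))

# Decoding requires no key, which shows base64 offers no confidentiality.
decoded = base64.b64decode(secret["data"]["password"]).decode("utf-8")
print(decoded == plaintext)
```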

5. Volumes and Persistent Storage

Kubernetes supports temporary and persistent storage via:

- emptyDir: Temporary directory for pod lifecycle.

- PersistentVolume (PV) and PersistentVolumeClaim (PVC): Abstractions for using external storage (e.g., AWS EBS, NFS).
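The relationship between a claim and the pod that uses it can be sketched with two manifests, again built as Python dictionaries and printed as JSON. The claim name, requested size, image, and mount path are assumptions for the example; which PersistentVolume satisfies the claim depends on the cluster's storage setup.

```python
import json

# The claim asks for storage without naming a specific disk.
claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data-claim"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "1Gi"}},
    },
}

# The pod refers to the claim by name and mounts it into the container.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-storage"},
    "spec": {
        "volumes": [
            {
                "name": "data",
                "persistentVolumeClaim": {"claimName": claim["metadata"]["name"]},
            }
        ],
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.27",
                "volumeMounts": [{"name": "data", "mountPath": "/var/lib/app"}],
            }
        ],
    },
}

print(json.dumps(claim, indent=2))
print(json.dumps(pod, indent=2))
```

Unlike an emptyDir, data behind the claim outlives any single pod that mounts it.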

6. Namespaces

Namespaces enable resource isolation and quota enforcement for multi-tenant environments. Teams can run separate workloads in different namespaces within the same cluster.
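Quota enforcement per namespace can be pictured as a simple admission check. This is a toy model with made-up team names and numbers; in a real cluster a ResourceQuota object holds the limits and the API server rejects requests that would exceed them.

```python
# Hypothetical CPU request quotas and current usage per namespace, in millicores.
quotas = {"team-a": 4000, "team-b": 2000}
usage = {"team-a": 3500, "team-b": 500}


def fits_quota(quota_m, used_m, request_m):
    """Return True if the new request keeps the namespace within its quota."""
    return used_m + request_m <= quota_m


def admit(namespace, request_m):
    """Check a request against the quota of its own namespace only."""
    return fits_quota(quotas[namespace], usage[namespace], request_m)


print(admit("team-a", 1000))  # would exceed team-a's quota
print(admit("team-b", 1000))  # fits within team-b's quota
```

Each namespace is checked against its own budget, so a busy team cannot consume capacity reserved for another.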

How Kubernetes Works Step by Step

Let’s break down a typical workflow for deploying a containerized application in Kubernetes:

- Write a Manifest: You describe your desired application state using YAML (e.g., how many pods, what container image, environment variables).

- Apply Configuration: Use `kubectl apply -f deployment.yaml` to submit the file to the Kubernetes API server.

- API Server Validates the Request: Validates schema and stores the desired state in `etcd`.

- Scheduler Assigns Pods to Nodes: Based on availability and resource usage.

- Kubelet Executes the Pod: Downloads the container image and starts it via the container runtime.

- Service Discovery: Exposes the pod via a Kubernetes Service for other pods or external clients.

- Controllers Monitor and Reconcile State: If a pod crashes or a node fails, Kubernetes automatically takes corrective actions.
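The first two steps above can be sketched by generating a Deployment manifest in code. The helper `build_deployment` and all of its argument values are hypothetical; the output is JSON rather than YAML only because Python's standard library has no YAML writer, and `kubectl apply -f` accepts both formats.

```python
import json


def build_deployment(name, image, replicas, env=None):
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    container = {"name": name, "image": image}
    if env:
        # Environment variables are a list of name/value pairs in the manifest.
        container["env"] = [{"name": k, "value": v} for k, v in env.items()]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


manifest = build_deployment("web", "nginx:1.27", replicas=3, env={"LOG_LEVEL": "info"})
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file such as `deployment.json` and passing it to `kubectl apply -f` would submit the desired state to the API server, after which the remaining steps happen inside the cluster.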

Real-World Applications of Kubernetes

- Microservices: Efficiently run and manage loosely coupled services.

- Big Data and AI Workloads: Run Spark, TensorFlow, and ML pipelines at scale.

- Edge Computing: Deploy lightweight Kubernetes clusters on IoT or remote devices.

- Blue-Green and Canary Deployments: Switch traffic between two complete environments, or roll out a new version to a subset of users first.

- GitOps and Infrastructure as Code: Sync configurations directly from Git repositories.

Kubernetes vs Other Orchestration Tools

| Feature | Kubernetes | Docker Swarm | Amazon ECS |
|---|---|---|---|
| Portability | High | Moderate | Tied to AWS |
| Ecosystem | Extensive | Limited | AWS-specific |
| Auto-scaling | Yes | Basic | Yes |
| Customization | High | Low | Moderate |
| Community Support | Strong | Weaker | AWS-supported only |

Key Advantages of Kubernetes

- Declarative Configuration: Simplifies deployment through reproducible manifests.

- Self-Healing Capabilities: Ensures high availability with automatic recovery.

- Horizontal Scaling: Adjust replicas based on CPU/memory metrics.

- Platform Agnostic: Supports all major cloud providers, VMs, and bare-metal systems.

- Modular Architecture: Extend Kubernetes with custom controllers, CRDs, and webhooks.
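For the horizontal scaling item above, the core calculation used by the Horizontal Pod Autoscaler is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements only that formula plus clamping to a replica range; the bounds used are example values, and the real autoscaler also applies a tolerance and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(current, current_metric, target_metric, min_r=1, max_r=10):
    """Compute the replica count from the ratio of observed to target metric."""
    raw = math.ceil(current * current_metric / target_metric)
    # Clamp to the configured range (example bounds, like minReplicas/maxReplicas).
    return max(min_r, min(max_r, raw))


print(desired_replicas(4, current_metric=90, target_metric=60))   # load above target
print(desired_replicas(4, current_metric=30, target_metric=60))   # load below target
print(desired_replicas(4, current_metric=200, target_metric=50))  # capped at max_r
```

The metric can be average CPU utilization in percent or any other value, as long as the observed and target numbers use the same unit.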

Challenges and Considerations

While Kubernetes offers powerful capabilities, it comes with some challenges:

- Steep Learning Curve: Mastering Kubernetes architecture and YAML syntax takes time.

- Resource Overhead: Control plane and node components consume CPU and memory, and operating them adds complexity.

- Security Configuration: Requires careful setup of role-based access control (RBAC) and network policies.

- Monitoring and Logging: Needs integration with external tools like Prometheus, Grafana, and ELK stack.

Conclusion

Kubernetes is not just a container orchestration tool—it is a comprehensive ecosystem that empowers teams to manage modern, scalable applications efficiently. Its ability to automate operations, recover from failures, and scale applications makes it indispensable in today’s cloud-native development landscape.

By understanding how Kubernetes works—from pods and deployments to services and controllers—you can build resilient systems that deliver software faster and more reliably. As you gain more experience, explore advanced topics like Helm, custom operators, ingress controllers, and service meshes like Istio.

Kubernetes is the future of infrastructure management, and learning it is a valuable investment in your software engineering journey.

I’m Shreyash Mhashilkar, an IT professional who loves building user-friendly, scalable digital solutions. Outside of coding, I enjoy researching new places, learning about different cultures, and exploring how technology shapes the way we live and travel. I share my experiences and discoveries to help others explore new places, cultures, and ideas with curiosity and enthusiasm.